In this blog post, we walk through the testing configurations we have used for xiRAID, a software RAID solution by Xinnor. Our goal is to show how xiRAID performs across different scenarios and to help you select the right configuration for your own testing, so that you can get the most out of xiRAID in your environment.

This guide covers six key testing configurations for xiRAID solutions. We begin with local RAID testing using xiRAID Classic, designed for high-performance applications running directly on servers. Next, we explore two Lustre configurations: a cluster-in-a-box solution and a disaggregated setup, both leveraging xiRAID Classic for enhanced performance and scalability. The fourth configuration focuses on a high-speed NFS solution using xiRAID Classic, ideal for small installations requiring top performance. Moving to xiRAID Opus, we examine a disaggregated NFS solution that virtualizes storage resources across multiple nodes. Finally, we look at optimizing database performance in virtual environments using xiRAID Opus, showcasing its capabilities in handling complex, transactional workloads. Each configuration is presented with detailed hardware requirements, testing methodologies, and expected performance outcomes.

I. xiRAID Classic

Let's start with an overview of Xinnor’s xiRAID Classic. xiRAID Classic is the only software RAID engine specifically optimized for NVMe devices, making it the best option for running performance-hungry applications directly on servers. These include:

- Relational Databases

- Time-series Databases

- Data Capturing

- Cybersecurity Applications

- All-Flash Array Backup Targets

When compared to traditional mdraid and hardware RAID solutions, xiRAID Classic delivers superior performance, particularly in write operations and degraded reads. This makes it an ideal choice for applications requiring high-speed data access and processing.

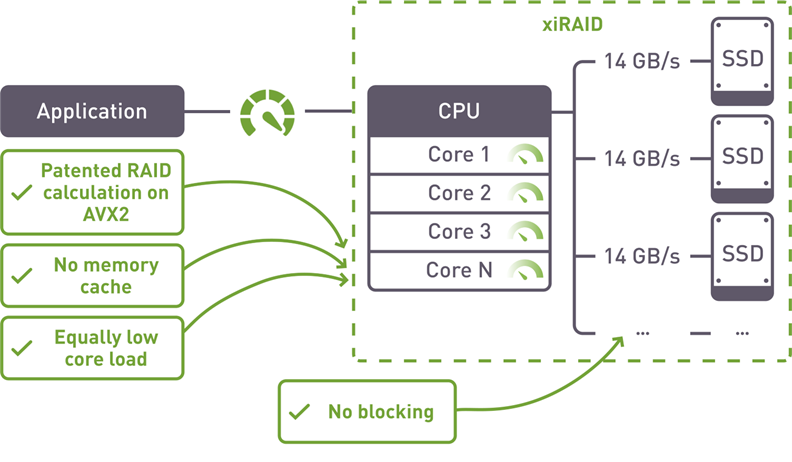

xiRAID Classic also boasts several key advantages, including:

- Exceptional performance in both normal and degraded modes

- CPU Affinity, ensuring efficient CPU usage

- Auto-tuning for sequential workloads

- Support for advanced RAID configurations like RAID 7.3 and RAID N+M

- Lockless datapaths

- AVX

We’ve conducted several configuration tests to further demonstrate the capabilities of our RAID solution. In the sections that follow, we'll explore the various testing configurations we’ve employed, providing insights into how xiRAID performs under different conditions.

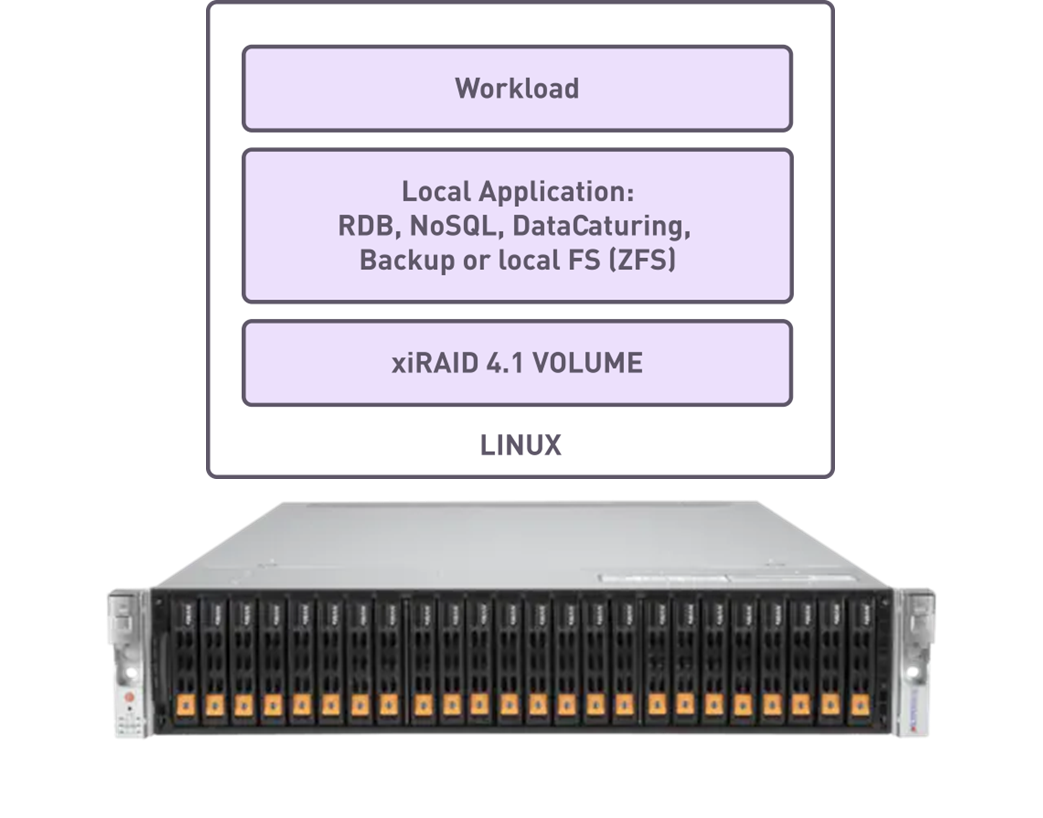

1. Local RAID Testing with xiRAID Classic

In one of the testing configurations, the focus is on Local RAID Testing using xiRAID Classic. This setup involves a single server equipped with 4 to 24 NVMe drives, utilizing a local application to leverage the high-performance volume, and a workload generator to stress test the system.

Testing Workflow

The testing process for local RAID configurations is structured as follows:

- Install RAID Software

- Create RAID Arrays: Configure the RAID arrays using the NVMe drives, selecting the appropriate RAID levels for testing.

- Install Application

- Run Workload

- Test Specialized Functions: Evaluate specialized xiRAID functions such as CPU Affinity, auto-tuning, and advanced RAID levels like RAID 7.3.

The goal of this testing is to ensure that xiRAID delivers superior performance, surpassing traditional RAID solutions, and providing a high level of customer satisfaction. Beyond the primary performance tests, additional evaluations are conducted to assess xiRAID's advanced features and capabilities.

Hardware and RAID Configuration Requirements

For optimal testing of xiRAID Classic, the following hardware and RAID configurations are used:

Hardware Requirements:

- NVMe Drives: A minimum of 4 NVMe drives, though 8 or more drives are recommended.

- CPU Cores: 2 CPU cores per NVMe drive.

- RAM: 32+ GB of RAM.

RAID Configuration:

- RAID Levels: RAID5, RAID6, RAID50, RAID 7.3.

- Special Features: CPU Affinity and AutoMerge are enabled to optimize performance.

Testing Methodologies

When evaluating xiRAID, a comprehensive approach to testing is recommended, incorporating both synthetic benchmarks and real-world scenarios:

Fio Benchmarks:

- 4k Random Read/Write: Use random data blocks to assess how efficiently the RAID configuration handles random access workloads.

- FullStripe Sequential Read/Write: Test performance with large, sequential data blocks to determine how well the RAID arrays manage continuous data streams.

- 1M Sequential Write: Perform 1M sequential writes, ideally with Adaptive Merge enabled.

- Degraded Reads: Evaluate the system's resilience by testing performance during both sequential and random reads under degraded conditions.

Application-Specific Tests:

- To gain a deeper understanding of how xiRAID Classic performs in real-world scenarios, consider conducting application-specific benchmarks using tools like pgbench, KxSystem Nano, MLPerf Storage, and sysbench. These tests simulate practical application performance, offering valuable insights into how xiRAID Classic handles everyday workloads.

- For a comprehensive evaluation, compare the results of these tests against other RAID solutions, such as mdraid. It's especially useful to test in degraded modes to emphasize xiRAID’s superior performance under failure conditions, ensuring it outperforms the competition in critical situations.
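The fio benchmarks listed above could be scripted along the following lines. This is a minimal sketch rather than Xinnor's own test harness: the device path `/dev/xi_test`, the 64 KiB strip size, the queue depths, job counts, and the 60-second runtime are all illustrative assumptions that do not come from this guide. It only builds the command lines; the full-stripe block size is taken as the strip size multiplied by the number of data drives.

```python
def full_stripe_bytes(strip_kib: int, drives: int, parity: int) -> int:
    """Bytes of data in one full stripe: strip size times the number of data drives."""
    return strip_kib * 1024 * (drives - parity)


def fio_command(name: str, device: str, rw: str, bs: int,
                iodepth: int, numjobs: int) -> list[str]:
    """Build one fio invocation using standard fio command-line options."""
    return [
        "fio",
        f"--name={name}",
        f"--filename={device}",
        f"--rw={rw}",
        f"--bs={bs}",
        f"--iodepth={iodepth}",
        f"--numjobs={numjobs}",
        "--ioengine=libaio",
        "--direct=1",
        "--time_based",
        "--runtime=60",          # assumed duration
        "--group_reporting",
    ]


def local_raid_suite(device: str, strip_kib: int, drives: int, parity: int) -> list[list[str]]:
    """Commands for the four benchmark types named in the list above."""
    stripe = full_stripe_bytes(strip_kib, drives, parity)
    return [
        fio_command("randread-4k", device, "randread", 4096, 128, 8),
        fio_command("randwrite-4k", device, "randwrite", 4096, 128, 8),
        fio_command("seqread-fullstripe", device, "read", stripe, 32, 1),
        fio_command("seqwrite-fullstripe", device, "write", stripe, 32, 1),
        fio_command("seqwrite-1m", device, "write", 1024 * 1024, 32, 1),
    ]


# Hypothetical RAID6 array of 10 drives (8 data + 2 parity) with a 64 KiB strip.
# Write tests against a raw device destroy its contents; use a scratch array.
for cmd in local_raid_suite("/dev/xi_test", 64, 10, 2):
    print(" ".join(cmd))
```

For degraded-read runs, the same read commands can be repeated after one drive has been taken out of the array.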

Expected Results:

When configuring RAID with xiRAID, you can expect performance to reach between 90% and 95% of the total combined drive performance, after factoring in the inherent RAID penalty.

How Does RAID Penalty Affect Performance?

Understanding how RAID configurations impact performance is crucial for optimizing your storage solutions. Below, we break down the expected performance impacts of different RAID operations:

4K Random Read:

- RAID performance matches 100% of the drives' combined performance.

4K Random Write:

- 50% of total drives' performance for RAID5 (25% for a 50/50 read-write mix).

- 33.3% for RAID6 (16.6% for a 50/50 read-write mix).

Full Stripe Sequential Read:

- RAID performance matches 100% of the drives' combined performance.

Full Stripe Sequential Write:

- (N−P)/N×100%, where N is the number of drives in the RAID array, and P is the number of parity drives.
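The penalties above can be turned into a quick estimate of what to expect from a given array. The sketch below is a hedged illustration, not an official sizing tool: it encodes only the fractions listed in this section and the 90% to 95% efficiency range quoted earlier, and the per-drive throughput used in the example is a made-up figure.

```python
from fractions import Fraction

# Fractions of combined drive performance, as listed in this section.
RANDOM_WRITE = {"raid5": Fraction(1, 2), "raid6": Fraction(1, 3)}
RANDOM_MIX_50_50 = {"raid5": Fraction(1, 4), "raid6": Fraction(1, 6)}


def full_stripe_write_fraction(n_drives: int, n_parity: int) -> Fraction:
    """(N - P) / N, where N is the drive count and P the number of parity drives."""
    if not 0 <= n_parity < n_drives:
        raise ValueError("parity count must be between 0 and N - 1")
    return Fraction(n_drives - n_parity, n_drives)


def expected_range(combined_drive_perf: float, fraction: Fraction,
                   low: float = 0.90, high: float = 0.95) -> tuple[float, float]:
    """Apply the RAID penalty, then the 90-95% efficiency range from the text."""
    ideal = combined_drive_perf * float(fraction)
    return ideal * low, ideal * high


# Example: RAID6 on 10 drives, assuming a hypothetical 5 GB/s write per drive.
combined = 10 * 5.0
fraction = full_stripe_write_fraction(10, 2)
low, high = expected_range(combined, fraction)
print(f"penalty fraction {fraction}, expected {low:.1f} to {high:.1f} GB/s")
```

The same `expected_range` helper works for IOPS: pass the combined random-write IOPS of the drives together with `RANDOM_WRITE["raid5"]` or `RANDOM_WRITE["raid6"]`.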

References

- xiRAID Classic Documentation

- Blog post “Performance Guide pt.1: Performance Characteristics and How It Can Be Measured”

- Blog post “Performance Guide pt.2: Hardware & Software Configuration”

- Blog post “Performance Guide pt.3: Setting up and Testing RAID”

- Blog post “Tuning ZFS and Testing xiRAID as Replacement for RAIDZ”

- Blog post “The Most Efficient RAID for NVMe Drives”

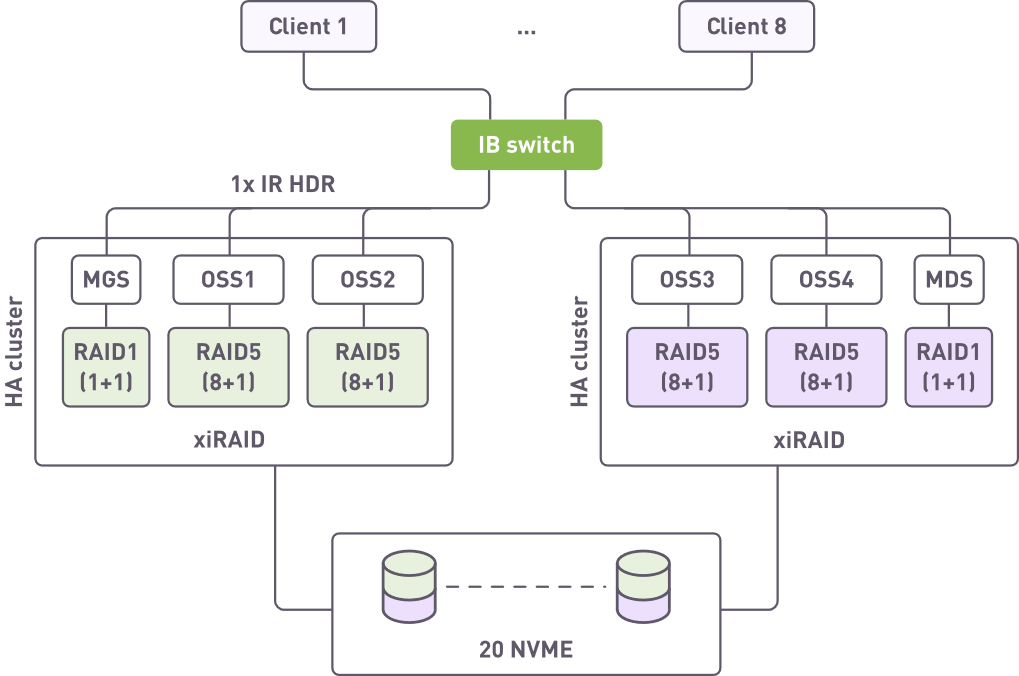

2. Cluster-in-the-box Lustre Solution with xiRAID Classic

The cluster-in-the-box Lustre solution, powered by xiRAID Classic, is engineered to deliver exceptional performance, straightforward deployment, and high availability (HA). This configuration is particularly advantageous for environments requiring robust protection against drive and node failures.

With xiRAID Classic, you can expect performance that is 2-4 times faster than traditional ZFS on sequential workloads, and 200 times faster on small block asynchronous I/O operations. This makes it a standout choice for high-demand applications. Moreover, xiRAID Classic is uniquely positioned as the only third-party RAID solution that supports HA in Lustre environments, ensuring continuous availability and data integrity.

Testing Setup and Workflow

The goal of this test is to showcase xiRAID Classic’s performance in a clustered environment. The setup uses a dual-controller node and achieves over 100 GBps of read/write throughput on Lustre from a single 2U system, which is twice as fast as the leading competitor.

Minimal Requirements for Testing:

- Hardware: One dual-controller node equipped with 20 PCIe5 drives.

- Configuration: The system is designed to combine the Management Server (MGS), Metadata Server (MDS), and Object Storage Server (OSS) roles within a single chassis, with high availability mode enabled.

Testing Workflow:

- Install RAID Software.

- Install Lustre Server Components.

- Client Setup: Install client software on eight separate machines and configure the LNET network.

- Configure RAIDs and HA Module.

- Run Tests: Conduct performance tests to evaluate the system's throughput and responsiveness.

- Node Failover Tests: Perform node failover tests to ensure system resilience and continued operation under failure conditions.

Hardware Configuration

The hardware configuration can be outlined as follows:

Server Side:

- At least 1 HA system.

- 20-24 PCIe5 drives.

- 2 CPU cores per NVMe drive per node.

- 128GB+ RAM per node.

- 3x400Gbit network cards.

Client Side:

- Eight clients, each equipped with 1x400Gbit card, 16 CPU cores, and 32GB RAM.

RAID Configuration

For optimal performance in this setup, the NVMe drives should be split into two namespaces, each tailored for specific roles within the Lustre environment:

RAID1 for MDS/MGS: The MDS and MGS roles should be configured with RAID1, utilizing a 1+1 setup, resulting in a total of four namespaces dedicated to these critical functions.

RAID6 or RAID5 for OSS: The OSS roles can be configured with either RAID6 or RAID5, depending on your redundancy needs:

- RAID6: Configured as 4x(8+2), offering redundancy with two parity drives.

- RAID5: Configured as 4x(8+1), providing redundancy with a single parity drive.
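Before creating the arrays, it can help to check that the planned layout fits the number of namespaces available. The sketch below makes two assumptions that this guide does not state explicitly: every RAID member occupies exactly one namespace, and the "1+1 setup" with four namespaces in total means two RAID1 mirrors. Treat the result as a planning aid only.

```python
def namespaces_needed(arrays: list[tuple[int, int, int]]) -> int:
    """arrays holds (groups, data, parity) tuples; one namespace per RAID member."""
    return sum(groups * (data + parity) for groups, data, parity in arrays)


def namespaces_available(drives: int, per_drive: int = 2) -> int:
    """Each NVMe drive is split into two namespaces, as described above."""
    return drives * per_drive


MDS_MGS = (2, 1, 1)    # assumed: two RAID1 (1+1) mirrors, four namespaces
OSS_RAID6 = (4, 8, 2)  # 4x(8+2)
OSS_RAID5 = (4, 8, 1)  # 4x(8+1)

for drives in (20, 24):
    for label, oss in (("RAID5", OSS_RAID5), ("RAID6", OSS_RAID6)):
        need = namespaces_needed([MDS_MGS, oss])
        have = namespaces_available(drives)
        print(f"{drives} drives, OSS on {label}: need {need}, have {have}, fits={need <= have}")
```

Under these assumptions the RAID5 layout uses all 40 namespaces of a 20-drive system, while the RAID6 layout needs 44 and therefore at least 22 drives.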

Testing Methodologies

To thoroughly evaluate the performance and resilience of the setup, several testing methodologies can be employed:

- IOR 1M DIO Reads and Writes: Assess large, sequential data transfers.

- Fio (Client-Server Mode with libaio): Test random and sequential I/O operations across the network.

- 4k Random Read/Write: Evaluate small, non-sequential data block performance.

- FullStripe Sequential Read/Write: Measure handling of large, continuous data streams.

- Degraded Mode and Node Fail: Test system resilience under stress and failure scenarios.
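For the fio client-server runs in the list above, a small helper can assemble the command lines. This is a sketch under assumptions: the host names and the job file name are hypothetical, and the layout follows fio's documented pattern of giving each `--client` argument its own job file. Note that in fio's terminology the "server" is the process started on each load-generating machine (here, the Lustre clients), while the "client" is the controlling process that sends the jobs.

```python
def fio_server_command() -> list[str]:
    """Run on every load-generating machine so it waits for jobs."""
    return ["fio", "--server"]


def fio_controller_command(hosts: list[str], job_file: str) -> list[str]:
    """Run once on the controlling machine; sends the same job file to every host."""
    if not hosts:
        raise ValueError("at least one host is required")
    argv = ["fio"]
    for host in hosts:
        argv += [f"--client={host}", job_file]
    return argv


# Eight hypothetical Lustre client hosts, matching the client count in this setup.
hosts = [f"lustre-client-{i}" for i in range(1, 9)]
print(" ".join(fio_server_command()))
print(" ".join(fio_controller_command(hosts, "seq-1m.fio")))
```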

Expected Results

In a test environment with one HA server and eight clients, you can anticipate achieving approximately 120 GBps for both sequential read and write operations. For workloads involving small blocks, the system is expected to deliver around 5 million IOPS.
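A rough way to sanity-check the throughput target is to compare it with the raw network line rate. The sketch below assumes that the 3x400Gbit cards are the total for the HA system, that "GBps" means decimal gigabytes per second, and that protocol overhead is ignored; if the cards are counted per node, the server-side ceiling doubles.

```python
def line_rate_gbytes_per_s(cards: int, gbit_per_card: int) -> float:
    """Raw line rate in gigabytes per second (8 bits per byte, no protocol overhead)."""
    return cards * gbit_per_card / 8


server_ceiling = line_rate_gbytes_per_s(3, 400)   # server side: 3x400Gbit
client_ceiling = line_rate_gbytes_per_s(8, 400)   # eight clients, 1x400Gbit each
bottleneck = min(server_ceiling, client_ceiling)

target = 120  # expected sequential throughput from the text, in GBps
print(f"server {server_ceiling} GB/s, clients {client_ceiling} GB/s")
print(f"target uses {target / bottleneck:.0%} of the narrower side")
```

Under these assumptions the server-side network, not the clients, is the narrower side, and the expected 120 GBps corresponds to 80% of its raw line rate.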

References

- Blog post “Raising data availability with xiRAID and Pacemaker. Part 1”

- Blog post “Raising data availability with xiRAID and Pacemaker. Part 2”

- Lustre Acceleration with xiRAID

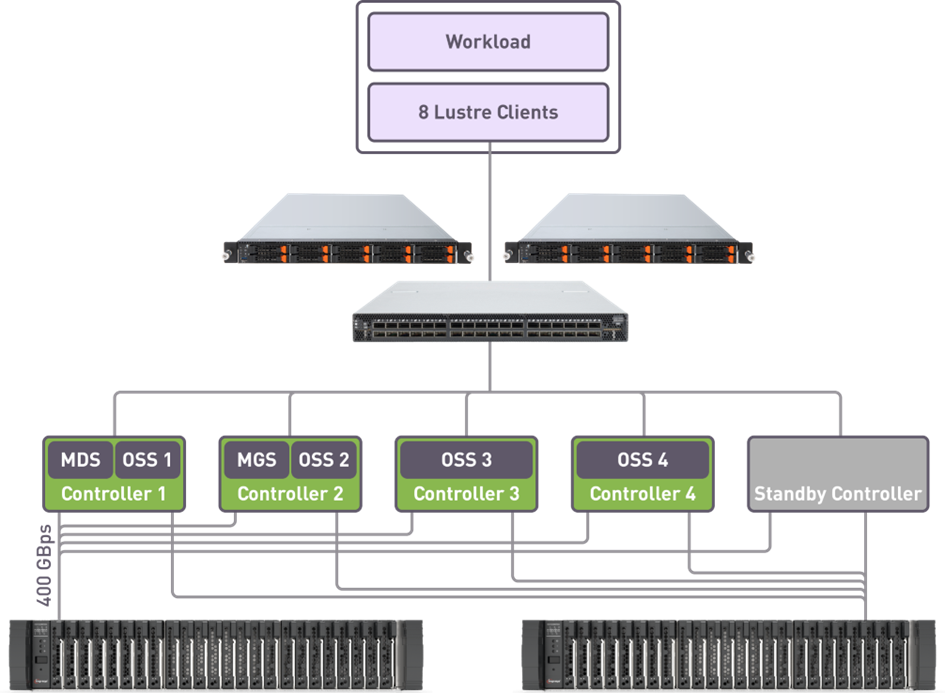

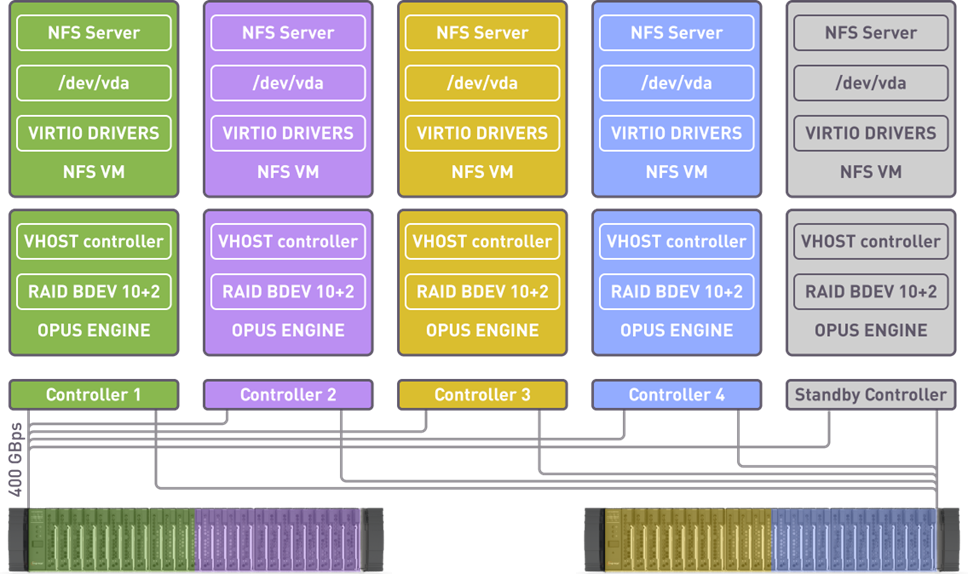

3. Disaggregated Lustre Solution Testing with xiRAID Classic

This section focuses on the Disaggregated Lustre Solution, utilizing xiRAID Classic to create a high-performance, scalable storage cluster. Unlike traditional 1+1 clusters, this disaggregated N+1 architecture is designed to maintain performance even in the event of node failures. This design not only enhances scalability but also reduces the amount of redundant hardware, since a single standby node covers N active nodes, offering a more efficient solution for large-scale storage needs.

Testing Setup and Workflow

The objective of this setup is to validate xiRAID Classic’s performance, resilience, and scalability within a disaggregated storage environment. The system is configured to handle various setups and failure scenarios, ensuring robust operation under real-world conditions.

Minimal Requirements for Testing

At least five storage nodes and two external EBOFs.

In a 4+1 configuration, if one of the four active controllers fails, the standby controller seamlessly takes over, ensuring continuous and smooth operation.

Testing Workflow:

- Install RAID Software.

- Install Lustre Server Components.

- Client Setup: Install and configure LNET client software on separate machines.

Configure RAIDs and HA:

- Split drives into two namespaces.

- Configure four namespaces for MGS and four namespaces in RAID10 for MDS.

- Set up two RAID 6 (8+2) arrays for each OSS.

- Enable auto merge to optimize performance.

- Run Tests.

- Failover Testing: Perform node failover tests to ensure the system maintains performance and data integrity under failure conditions.

Hardware Configuration

The testing environment includes the following hardware setup:

Server Side:

- At least five storage nodes.

- 2 CPU cores per NVMe drive per node.

- 128GB+ RAM per node.

- 1x400Gbit network card per node.

- 2 EBOFs, providing a total network capacity of 800Gbps.

Client Side:

Eight clients, each configured with:

- 1x400Gbit network card.

- 16 CPU cores.

- 32GB RAM.

RAID Configuration

The NVMe drives in this setup are split into two namespaces, each optimized for specific roles within the Lustre environment:

- RAID10 for MDS/MGS: The MDS and MGS are configured with RAID10, using a 1+1 setup, which results in four namespaces dedicated to these critical functions.

- RAID5/6 for OSS: The OSS is configured with either RAID6 (8+2) or RAID5, depending on the desired level of redundancy. This setup ensures robust data protection while maximizing storage efficiency.

Testing Methodologies

To thoroughly evaluate the performance and resilience of the Disaggregated Lustre Solution with xiRAID Classic, several testing methodologies are recommended. These tests are designed to simulate real-world scenarios and assess the system's capabilities under various conditions:

- IOR 1M DIO Reads and Writes: Test large, sequential data transfers.

- Fio (Client-Server Mode with libaio): Assess random and sequential I/O over the network.

- 4k Random Read/Write: Evaluate small block performance.

- FullStripe Sequential Read/Write: Measure large, continuous data stream handling.

- Degraded Mode & Node Fail Testing: Test system resilience under stress and failures.

Expected Results

With the described configuration, the disaggregated Lustre solution utilizing xiRAID Classic is expected to deliver the following performance benchmarks:

- Sequential Read/Write: The system is anticipated to achieve approximately 150GBps for sequential reads and 120GBps for sequential writes, showcasing its ability to handle large data transfers efficiently.

- Small Block Performance: The setup is expected to manage around 6 million IOPS, reflecting its capacity to efficiently process high volumes of small, random I/O operations.

- Failover Performance: During failover scenarios, the system is expected to maintain performance without degradation, ensuring continued operation and data availability even in the event of node failures.

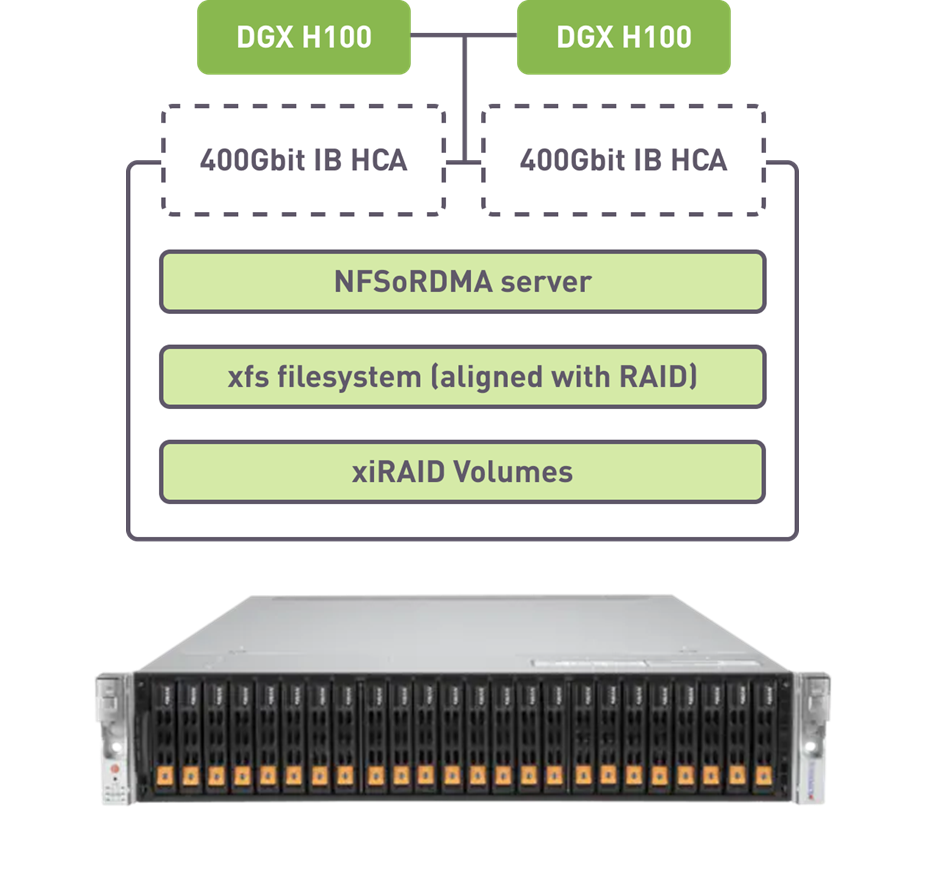

4. NFS Solution Testing with xiRAID Classic

This segment details the NFS solution using xiRAID Classic, designed to deliver extremely fast NFSv3 performance. With its plug-and-play capability, this setup is particularly suited for small installations that demand high performance with minimal complexity.

Testing Setup and Workflow

The goal is to validate the high-speed performance of the NFS solution and its ease of deployment. This can be achieved by utilizing a single server setup equipped with 8-24 NVMe drives, along with a network setup involving 2-4 clients connected over a 200-400 Gbit network.

Testing Workflow:

- Install RAID Software: Start by installing xiRAID on the server to manage the NVMe drives.

- Create Local RAIDs and Align File System: Configure local RAID arrays and align the file system for optimal performance.

- Configure NFSv3 Server.

- Configure NFSv3 Clients.

- Run Tests: Conduct performance tests to measure read and write speeds, as well as system resilience under different conditions.

Hardware Configuration

The recommended hardware setup for this testing is as follows:

Server Side:

- A single system equipped with 8-24 NVMe drives.

- 2 CPU cores per NVMe drive to handle intensive I/O operations.

- 128GB+ RAM to support high-performance operations.

- 1 or 2x400Gbit network cards for high-speed connectivity.

Client Side:

2 or 4 clients, each configured with:

- 1x400Gbit network card.

- 16 CPU cores.

- 32GB RAM.

RAID Configuration

The NVMe drives in this setup are configured to optimize both data storage and file system journaling:

- RAID50 for Data: The primary data storage is configured with RAID50, combining the benefits of striping (RAID0) and parity (RAID5) for enhanced performance and data protection.

- RAID1 for File System Journal: The file system journal is configured with RAID1, providing mirrored redundancy to ensure data integrity and quick recovery in case of a drive failure.
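Aligning the file system to the RAID geometry usually means telling it the strip size and the number of data drives. The sketch below assumes XFS with an external log, which this guide does not specify; the device names `/dev/xi_data` and `/dev/xi_log`, the 64 KiB strip size, and the two-group (4+1) RAID50 layout are likewise illustrative assumptions. It uses the standard `mkfs.xfs` options `-d su=...,sw=...` and `-l logdev=...`.

```python
def raid50_data_drives(groups: int, drives_per_group: int) -> int:
    """RAID50 stripes across RAID5 groups; each group gives up one drive to parity."""
    return groups * (drives_per_group - 1)


def mkfs_xfs_command(data_dev: str, log_dev: str,
                     strip_kib: int, data_drives: int) -> list[str]:
    """Build an mkfs.xfs call with stripe unit (su) and stripe width (sw) set."""
    return [
        "mkfs.xfs",
        "-d", f"su={strip_kib}k,sw={data_drives}",
        "-l", f"logdev={log_dev}",
        data_dev,
    ]


# Hypothetical layout: RAID50 built from 2 groups of 5 drives, 64 KiB strip,
# with the journal placed on a separate RAID1 volume.
data_drives = raid50_data_drives(groups=2, drives_per_group=5)
print(" ".join(mkfs_xfs_command("/dev/xi_data", "/dev/xi_log", 64, data_drives)))
```

With an external log, the same log device also has to be passed at mount time through the `logdev=` mount option.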

Testing Methodologies

For this NFS solution, the following testing methodologies are recommended to comprehensively evaluate performance:

- Fio in Client-Server Mode with libaio: Utilize Fio in a client-server configuration using the libaio engine to test both random and sequential I/O operations. This approach provides a detailed understanding of the system’s performance across the network.

- 4k Random Read/Write: Execute 4k random read and write tests to assess how well the system handles small, non-sequential data blocks, which are common in real-world applications like databases.

- 1M Sequential Read/Write: Conduct 1M sequential read and write tests to evaluate the system’s efficiency in handling large, continuous data transfers, which are crucial for workloads like media streaming or large file transfers.

- Testing in Degraded Mode: Perform tests under degraded conditions to ensure the system maintains performance and data integrity even when components fail or under stress.

Expected Results

The NFS solution with xiRAID Classic should deliver approximately 45 GBps for reads and 30 GBps for writes with 2 clients, and scale to 90 GBps for reads and 60 GBps for writes with 4 clients.

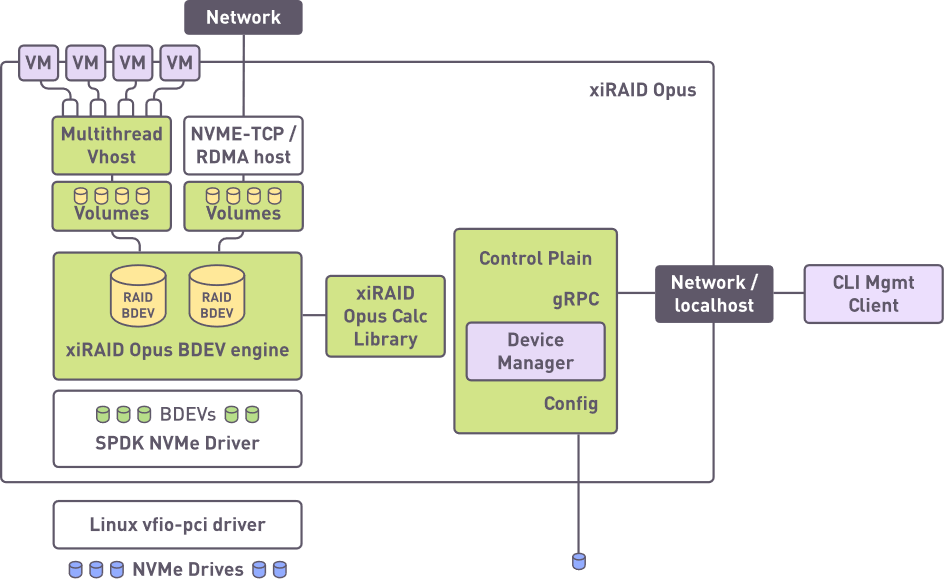

II. xiRAID Opus

xiRAID Opus is a cutting-edge storage engine designed specifically for disaggregated devices. It is a software RAID engine operating in user space, ideal for network-attached NVMe and for virtualization. In this case, Xinnor started from the SPDK libraries and implemented its own RAID calculation algorithm to achieve the best possible performance with minimal system resources load. Furthermore, xiRAID Opus boasts a broader array of integrated functionalities, including built-in features such as NVMe initiator, NVMe over TCP/RDMA and Vhost controller. These features facilitate direct connectivity to modern storage technologies, without relying on external components, enhancing efficiency and flexibility in networking and virtualization scenarios.

5. Disaggregated NFS Solution Testing with xiRAID Opus

In this section, we will outline the testing workflow, requirements, and methodologies for evaluating a disaggregated NFS solution using xiRAID Opus.

Testing Setup and Workflow

The goal of this testing is to assess the performance and resilience of a disaggregated NFS solution powered by xiRAID Opus. The setup demonstrates how xiRAID Opus virtualizes storage resources, simplifies data management, and maintains high performance, even under failure conditions.

Testing Workflow:

- Install Opus Software.

- Create NFS Server VMs.

- Configure RAIDs and Pass Them to VMs.

- Configure NFS Server Components.

- Install Client Software and Configure.

- Run Tests: Conduct a series of performance tests to assess the throughput, latency, and overall efficiency of the NFS solution.

- Perform Failover Tests: Simulate failover scenarios to evaluate the system's ability to maintain performance and data availability during node failures.

In a 4+1 configuration, if one of the four active controllers fails, the standby controller seamlessly takes over, ensuring continuous and smooth operation.

Hardware Configuration

For this disaggregated NFS solution, a robust hardware setup is necessary to support high-performance operations across multiple nodes:

Server Side:

- Storage Nodes: At least 5 storage nodes, each equipped with 2 CPU cores per NVMe drive and 128GB+ RAM.

- Network: Each node is connected via a 1x400Gbit network card, ensuring high-speed data transfers.

- EBOFs: Two EBOFs with a total network capacity of 800Gbps manage the networked storage devices.

Client Side:

Clients: 8 clients configured with:

- 1x400Gbit network card

- 16 CPU cores

- 32GB RAM

RAID Configuration

To optimize storage performance and ensure data protection in this disaggregated setup, the NVMe drives on each storage node are configured as follows:

- RAID6 (10+2) for Storage: Each storage node is set up with RAID6, providing a balance between storage efficiency and data redundancy. The (10+2) configuration ensures the system can tolerate up to two drive failures per array without data loss.

Testing Methodologies

To evaluate the capabilities of xiRAID Opus in this disaggregated NFS setup, the following testing methodologies are recommended:

- IOR 1M DIO Reads/Writes: Assess large, sequential data transfer performance.

- Fio, Client-Server Mode with libaio: Test random and sequential I/O across the network.

- 4k Random Read/Write: Gauge performance with small, non-sequential data blocks.

- FullStripe Sequential Read/Write: Measure efficiency in managing large data streams.

- Degraded Mode & Node Fail: Test system resilience under failure conditions.

Expected Results

Based on the hardware and RAID configurations used in this setup, the expected performance outcomes are:

- Sequential Read/Write Performance: The system is expected to achieve approximately 150GBps for sequential reads and 90GBps for sequential writes, demonstrating its capability to handle intensive data throughput.

- Failover Performance: In the event of a node failure, the system should maintain its performance without any degradation, ensuring continuous operation and data availability.

Fio Configuration for Testing

For the performance tests, the following Fio configurations are recommended:

Sequential Read/Write Test Configuration:

    [global]
    bs=1M
    iodepth=32
    direct=1
    ioengine=libaio
    ; use rw=read for the read test and rw=write for the write test
    rw=read
    size=100G

    [dir]
    directory=/test

Random Read Test Configuration:

    [global]
    bs=4k
    iodepth=128
    direct=1
    ioengine=libaio
    rw=randread
    size=100G
    norandommap

    [dir]
    directory=/test
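When fio is run with `--output-format=json`, the results can be reduced to the two figures this guide cares about: throughput and IOPS. The parser below is a minimal sketch that assumes the usual fio 3.x JSON layout (a top-level `jobs` list with `read` and `write` sections containing `io_bytes`, `bw_bytes` or `bw` in KiB/s, and `iops`); the sample report at the end is made-up data used only to exercise the function.

```python
import json


def summarize(fio_json_text: str) -> list[dict]:
    """Return one row per job and direction that actually transferred data."""
    report = json.loads(fio_json_text)
    rows = []
    for job in report["jobs"]:
        for direction in ("read", "write"):
            stats = job[direction]
            if stats["io_bytes"] == 0:
                continue  # this direction was not exercised by the job
            # Prefer bytes/s; fall back to the KiB/s field if bw_bytes is absent.
            bw_bytes = stats["bw_bytes"] if "bw_bytes" in stats else stats["bw"] * 1024
            rows.append({
                "job": job["jobname"],
                "direction": direction,
                "gb_per_s": bw_bytes / 1e9,
                "iops": stats["iops"],
            })
    return rows


# Fabricated sample in the assumed layout, for illustration only.
sample = json.dumps({"jobs": [{
    "jobname": "dir",
    "read": {"io_bytes": 1048576000, "bw_bytes": 2000000000, "iops": 1907.3},
    "write": {"io_bytes": 0, "bw_bytes": 0, "iops": 0},
}]})
for row in summarize(sample):
    print(row)
```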

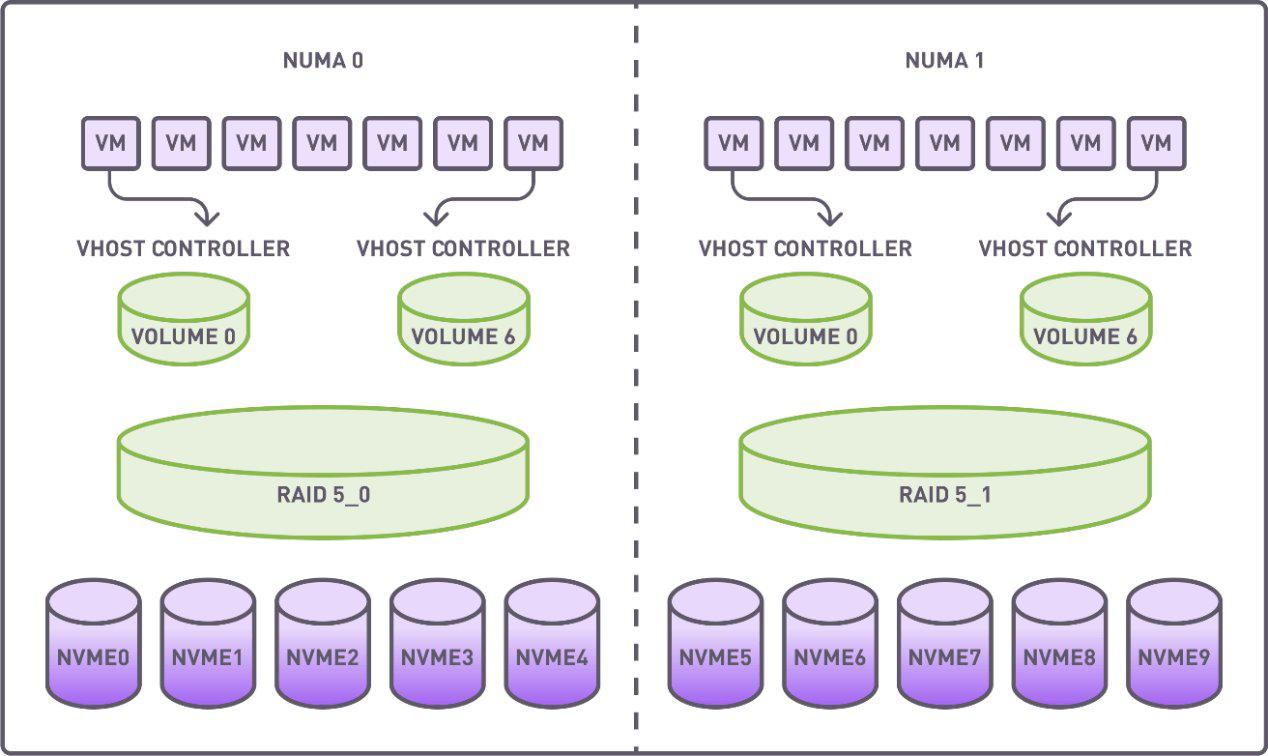

6. Database in Virtual Environment

This section provides an overview of the testing setup, hardware requirements, and methodologies for assessing the performance of PostgreSQL databases in virtualized environments using xiRAID Opus.

Testing Setup and Workflow

The goal of this testing is to evaluate the efficiency and performance of PostgreSQL running on virtual machines with storage managed by xiRAID Opus. This setup highlights how xiRAID Opus optimizes database operations and ensures high performance under various workloads and conditions.

Testing Workflow:

- Install PostgreSQL on Virtual machines.

- Configure PostgreSQL and Storage.

- Create and Initialize Test Database.

- Run pgbench Tests: Use the pgbench utility to execute tests with varying numbers of clients.

- Monitor Array Performance: Utilize iostat to observe array performance during tests.

Hardware Configuration

Server Side:

- Motherboard: Supermicro H13DSH.

- Processors: Dual AMD EPYC 9534 64-Core.

- Memory: 773,672 MB.

- Storage: 10x KIOXIA KCMYXVUG3T20 drives.

Clients:

- Virtual Machines: Each with 8 vCPUs and 32 GB RAM.

RAID Configuration

The NVMe drives in this setup are configured to optimize performance and data protection:

- Two RAID (4+1) Groups: Each RAID group is set up with a 4+1 configuration to provide a balance of performance and redundancy.

- 64K Stripe Size: A 64K stripe size is used to enhance data distribution across the drives.

- Segmentation: Each RAID group is divided into 7 segments, with each segment allocated to a VM via a dedicated vhost controller.

Testing Methodologies

To evaluate the performance of the database in a virtual environment with xiRAID Opus, the following testing methodologies are recommended:

- pgbench Utility: Use the pgbench tool with the built-in scripts: tpcb-like, simple-update, and select-only to simulate various database workloads.

- Client Variation: Run tests with varying numbers of clients, from 10 to 1,000, to assess scalability under different loads.

- Worker Threads Configuration: Match worker threads to the number of VM cores to ensure optimal resource utilization during testing.

- Normal and Degraded Modes: Conduct tests in both normal and degraded modes to evaluate performance stability and resilience under failure conditions.

- Performance Monitoring: Use iostat to monitor array performance throughout the tests, providing insights into I/O operations and system throughput.
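The pgbench runs described above form a small test matrix: three built-in scripts multiplied by the client counts. The sketch below builds the command lines with standard pgbench options (`-i`, `-s`, `-b`, `-c`, `-j`, `-T`). The database name, scale factor, run duration, and the intermediate client counts are hypothetical choices; only the script names, the 10 to 1,000 client range, and the 8 worker threads (matching the 8 vCPUs per VM) come from this guide.

```python
from typing import Iterator

BUILTIN_SCRIPTS = ("tpcb-like", "simple-update", "select-only")


def pgbench_init(dbname: str, scale: int) -> list[str]:
    """Create and populate the pgbench tables at the given scale factor."""
    return ["pgbench", "-i", "-s", str(scale), dbname]


def pgbench_runs(dbname: str, client_counts: list[int],
                 threads: int, seconds: int) -> Iterator[list[str]]:
    """Yield one pgbench command per (script, client count) combination."""
    for script in BUILTIN_SCRIPTS:
        for clients in client_counts:
            yield [
                "pgbench",
                "-b", script,
                "-c", str(clients),
                "-j", str(min(threads, clients)),  # never more threads than clients
                "-T", str(seconds),
                dbname,
            ]


# Assumed values: database "bench", scale 1000, 60-second runs.
print(" ".join(pgbench_init("bench", 1000)))
for cmd in pgbench_runs("bench", [10, 100, 500, 1000], threads=8, seconds=60):
    print(" ".join(cmd))
```

Running with 1,000 clients typically requires raising PostgreSQL's `max_connections` above its default. `iostat` can be left running in a separate terminal during each run to capture array-level statistics.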

Expected Results

Based on the testing methodologies, xiRAID Opus is expected to significantly outperform mdraid across all scenarios:

- Select-Only Tests: xiRAID Opus delivers 30-40% better performance compared to mdraid.

- Degraded Mode: The gap widens dramatically, with xiRAID Opus running 20 times faster than mdraid under failure conditions.

- Simple-Update Tests: Expect xiRAID Opus to be 6 times faster than mdraid, demonstrating superior handling of update-intensive workloads.

- TPC-B Like Tests: xiRAID Opus shows 5 times the performance of mdraid, highlighting its efficiency in complex transactional environments.

Closing Remarks

We hope this guide has provided valuable insights into the testing configurations and capabilities of xiRAID. Whether you are setting up local RAID solutions, clustered environments, or disaggregated systems, xiRAID offers robust performance and flexibility to meet your needs. If you have any questions or need further assistance, please don't hesitate to reach out to us at request@xinnor.io. Thank you for choosing Xinnor for your storage solutions!