Databases

Databases play a crucial role in storing, organizing, and managing large amounts of data efficiently. As databases grow in size and complexity, ensuring data availability and protection becomes increasingly important.

RAID offers a method of combining multiple physical disks into a single logical unit, providing increased data redundancy and improved performance. It is a valuable tool in maintaining the integrity and reliability of databases, making RAID storage an indispensable solution for organizations dealing with critical data.

Learn more about how xiRAID has enhanced database performance in three settings: in a standalone database solution (in partnership with KX), in a virtualized environment (in partnership with KIOXIA), and in degraded mode, where it sustains database performance (in partnership with ScaleFlux).

Standalone Database Solution

Case: KDB+ in partnership with KX

Kx provides a high-speed streaming analytics platform with kdb+, the fastest time-series database. It optimizes data ingestion, analysis, and storage for large volumes of historical and streaming data. The platform supports data capture, processing, enrichment, analytics, and interactive visualizations. Solutions built on Kx offer redundancy, fault tolerance, query capabilities, filtering, alerting, reporting, and visualizations. Its columnar design and in-memory capabilities provide speed and efficiency compared to relational databases.

Native time-series support enhances speed and performance for streaming and historical data operations. A high-performance storage subsystem is a must to support very large data volumes and keep query latency low.

Solution

Kx customers utilize server infrastructure with NVMe and SSD storage to ensure fast data processing and support analytics. xiRAID is specialized software for SSD and NVMe drives, maximizing their performance. Kx uses xiRAID in its R&D labs for testing IoT workloads and large data volumes. xiRAID has boosted Kx's write performance by 88%, sequential read performance by 39%, and reduced latency, enabling more data processing with current infrastructure.

Test Results

| | Before (VROC) | After |
|---|---|---|
| Total Write Rate (async) MiB/s | 3,420 | 6,440 |
| Streaming Read (mapped) Rate MiB/s | 14,100 | 19,565 |
| Streaming ReRead (mapped) Rate MiB/s | 47,905 | 72,461 |
| Random Read 1 MB (mapped) Rate MiB/s | 10,892 | 7,386 |
| Random Read 64K (mapped) Rate MiB/s | 6,992 | 3,542 |
| Random Read 1 MB (unmapped) Rate MiB/s | 8,914 | 6,485 |
| Random Read 64K (unmapped) Rate MiB/s | 5,735 | 3,050 |

Kx stores data in a columnar database, and most customer workloads read and write data sequentially. Sequential writes and streaming reads are where the test showed the largest gains, so Kx users benefit from significantly higher read and write performance than is available with alternative storage platforms.
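The percentage gains quoted for write and sequential read performance can be re-derived from the table. The sketch below is only an arithmetic check on the sequential rows; the dictionary layout and the `percent_change` helper are illustrative names, not part of any Kx or xiRAID tooling.

```python
# Sequential-workload figures copied from the test results table (MiB/s),
# stored as (before, after) pairs.
results = {
    "Total Write Rate (async)": (3420, 6440),
    "Streaming Read (mapped)": (14100, 19565),
    "Streaming ReRead (mapped)": (47905, 72461),
}


def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


changes = {name: percent_change(b, a) for name, (b, a) in results.items()}

for name, pct in changes.items():
    print(f"{name}: {pct:+.1f}%")
```

Rounded to whole percentages, the write and streaming read rows reproduce the 88% and 39% figures cited in the solution description.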

Test setup

- 24 NVMe drives, 2 TB each (48 TB raw)

- RAID 50 configuration with 6 RAID 5 groups

- Nano benchmark running 20 threads for read and write operations

The nano benchmark measures the basic raw I/O capability of non-volatile storage from the kdb+ perspective.
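As a rough illustration of what this layout means for capacity, the sketch below estimates the usable space of the RAID 50 array. It assumes the 24 drives are split evenly into the 6 RAID 5 groups (4 drives per group), which the setup list does not state explicitly, and it ignores metadata and filesystem overhead. The function name is hypothetical.

```python
def raid50_usable_tb(total_drives: int, drive_tb: float, groups: int) -> float:
    """Usable capacity of a RAID 50 array built from equal RAID 5 groups.

    Each RAID 5 group gives up one drive's worth of capacity to parity.
    """
    if total_drives % groups != 0:
        raise ValueError("drives must divide evenly across the groups")
    drives_per_group = total_drives // groups
    if drives_per_group < 3:
        raise ValueError("a RAID 5 group needs at least 3 drives")
    data_drives = groups * (drives_per_group - 1)
    return data_drives * drive_tb


raw_tb = 24 * 2                          # 48 TB raw, as listed in the setup
usable_tb = raid50_usable_tb(24, 2, 6)   # assumes 4 drives per RAID 5 group

print(f"raw: {raw_tb} TB, usable: {usable_tb} TB")
```

Under that even-split assumption, 18 of the 24 drives hold data and 6 drives' worth of capacity goes to parity.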

Database in Virtualized Environment

Case: PostgreSQL in partnership with KIOXIA

PostgreSQL, renowned for its versatility and robustness, has become a cornerstone in various applications, from small-scale websites to large enterprise systems. However, to fully leverage PostgreSQL's capabilities, especially in demanding workloads, fast storage solutions are imperative. In partnership with Kioxia, we embarked on a quest to optimize PostgreSQL performance within virtualized environments.

The challenge lay in finding the optimal storage solution that would ensure low latency and high throughput, vital for PostgreSQL's transactional nature and ability to handle substantial datasets. Virtual Machines (VMs) offer flexibility, scalability, and cost-effectiveness, making them the preferred choice for running PostgreSQL, particularly in development and testing environments.

However, the abstraction layer introduced by virtualization can sometimes lead to performance overhead compared to running directly on bare metal. Conversely, deploying applications on bare metal often results in underutilization of CPU and storage resources, as one application typically doesn't fully leverage the server's performance. Thus, striking the right balance between virtualization and performance optimization posed a significant challenge.

Solution

To address this challenge, we conducted comprehensive performance comparisons between different storage solutions, focusing on vHOST Kernel Target with Mdadm and SPDK vhost-blk target protected by Xinnor’s xiRAID Opus.

Mdadm, a software tool in Linux systems, manages software RAID configurations, utilizing the computer's CPU and software to enhance data redundancy and performance across multiple disks. In contrast, xiRAID Opus, built upon SPDK libraries, offers a high-performance software RAID engine specifically designed for NVMe storage devices.

Utilizing the pgbench utility, we conducted tests with varying numbers of clients, reaching stability at 100 clients across all script types. Aligning worker threads with VM cores and conducting tests three times, we recorded average results across all VMs to ensure accuracy. Additionally, we assessed select-only tests in degraded mode to understand the maximum impact on database performance, continuously monitoring array performance using the iostat utility.
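The procedure described above (built-in pgbench scripts, 100 clients, worker threads matched to the VM's cores, three runs averaged) could be scripted roughly as follows. This is a minimal sketch, not the harness used for the tests: the database name `bench`, the 60-second duration, and all function names are assumptions, and the tps parser only looks for the `tps = <number>` line that pgbench prints in its summary.

```python
import os
import re
import statistics
import subprocess

# Built-in pgbench script names accepted by the -b option.
BUILTIN_SCRIPTS = ("select-only", "simple-update", "tpcb-like")


def pgbench_cmd(script, clients=100, threads=None, seconds=60, dbname="bench"):
    """Build a pgbench command line for one timed run.

    `seconds` and `dbname` are placeholder values, not taken from the tests.
    """
    if script not in BUILTIN_SCRIPTS:
        raise ValueError(f"unknown built-in script: {script}")
    # Match worker threads to the cores visible inside the VM by default.
    threads = threads or os.cpu_count() or 1
    return [
        "pgbench",
        "-b", script,         # built-in script to run
        "-c", str(clients),   # concurrent client sessions
        "-j", str(threads),   # worker threads
        "-T", str(seconds),   # run duration in seconds
        dbname,
    ]


def parse_tps(output):
    """Extract the transactions-per-second value from pgbench output."""
    match = re.search(r"tps = ([0-9.]+)", output)
    if match is None:
        raise ValueError("no tps line found in pgbench output")
    return float(match.group(1))


def average_tps(script, runs=3, **kwargs):
    """Run pgbench `runs` times and return the mean tps."""
    samples = []
    for _ in range(runs):
        done = subprocess.run(
            pgbench_cmd(script, **kwargs),
            capture_output=True, text=True, check=True,
        )
        samples.append(parse_tps(done.stdout))
    return statistics.mean(samples)
```

Calling `average_tps("select-only")` inside each VM and then averaging across VMs would mirror the described method; it requires a database already initialized with `pgbench -i`.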

This comprehensive approach enabled us to identify the most efficient storage solution for optimizing PostgreSQL performance within virtualized environments, ensuring seamless data access and minimizing downtime for demanding workloads.

Test Results

| Test | 1 VM Performance | Latency (lower is better) | Total Server Performance (xiRAID: 14 VMs, mdraid: 16 VMs) |
|---|---|---|---|
| select-only, xiraid | 110K tps | 0.9 ms | 1540K tps |
| select-only, mdraid | 76K tps | 1.3 ms | 1216K tps |
| select-only, xiraid, degraded mode | 112K tps | 0.9 ms | 1568K tps |
| select-only, mdraid, degraded mode | 4.6K tps | 22 ms | 74K tps |
| simple-update, xiraid | 26K tps | 3.8 ms | 364K tps |
| simple-update, mdraid | 4.3K tps | 23 ms | 69K tps |
| tpc-b-like, xiraid | 21K tps | 5 ms | 294K tps |
| tpc-b-like, mdraid | 4K tps | 25 ms | 64K tps |

Test setup

Drives: 10xKIOXIA KCMYXVUG3T20

CPU: Dual AMD EPYC 9534 64-Core Processors

OS: Ubuntu 22.04.3 LTS

PostgreSQL Version: 15

VMs: 14, with each using segmented RAID volumes as storage devices

When assessing different protection schemes, performance under both normal and degraded conditions is crucial. In select-only tests, xiRAID Opus outperforms mdraid by 30-40%, helped by its ability to run the vhost target separately. In degraded mode, mdraid is more than 20 times slower than xiRAID, which can lead to business losses, especially in time-sensitive sectors like online travel or trading. mdraid is also about six times slower than xiRAID Opus for small block writes and about five times slower in CPU-intensive operations like the TPC-B-like script. Overall, xiRAID ensures stable performance across multiple VMs, facilitating uninterrupted data access even during failures or heavy write loads. Additionally, its VM scalability enables administrators to consolidate servers, simplifying storage infrastructure and yielding substantial cost savings.
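The multipliers in this summary follow from the per-VM figures in the results table, and the server totals are the per-VM figures multiplied by the VM counts given in the table header. The sketch below recomputes both; the data structures and helper names are illustrative only.

```python
# Per-VM throughput in transactions per second, copied from the results table.
per_vm_tps = {
    "select-only": {"xiraid": 110_000, "mdraid": 76_000},
    "select-only, degraded": {"xiraid": 112_000, "mdraid": 4_600},
    "simple-update": {"xiraid": 26_000, "mdraid": 4_300},
    "tpc-b-like": {"xiraid": 21_000, "mdraid": 4_000},
}

# VM counts from the table header.
vm_count = {"xiraid": 14, "mdraid": 16}


def server_total(test: str, engine: str) -> int:
    """Whole-server tps: per-VM tps multiplied by the number of VMs."""
    return per_vm_tps[test][engine] * vm_count[engine]


def per_vm_ratio(test: str) -> float:
    """How many times faster xiRAID is than mdraid on a single VM."""
    row = per_vm_tps[test]
    return row["xiraid"] / row["mdraid"]


for test in per_vm_tps:
    print(
        f"{test}: {per_vm_ratio(test):.2f}x per VM, "
        f"server totals {server_total(test, 'xiraid'):,} vs "
        f"{server_total(test, 'mdraid'):,} tps"
    )
```

Note that the select-only advantage looks different depending on whether single-VM figures or whole-server totals are compared, because the two configurations ran different numbers of VMs.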

Sustainable Database Performance in Degraded Mode

Case: Aerospike DB in partnership with ScaleFlux

ScaleFlux develops innovative storage solutions for data centers and cloud computing, with their flagship product being the CSD (Computational Storage Drive) series. The CSD series combines high-performance NVMe SSD with Hardware Compute Engines to offer a flexible, efficient storage solution. It boosts infrastructure efficiency, increases storage density, enhances compute performance, and reduces data storage costs, providing a user-friendly solution that unlocks new possibilities for businesses.

ScaleFlux is evaluating the feasibility of using xiRAID in latency-sensitive distributed database applications.

Solution

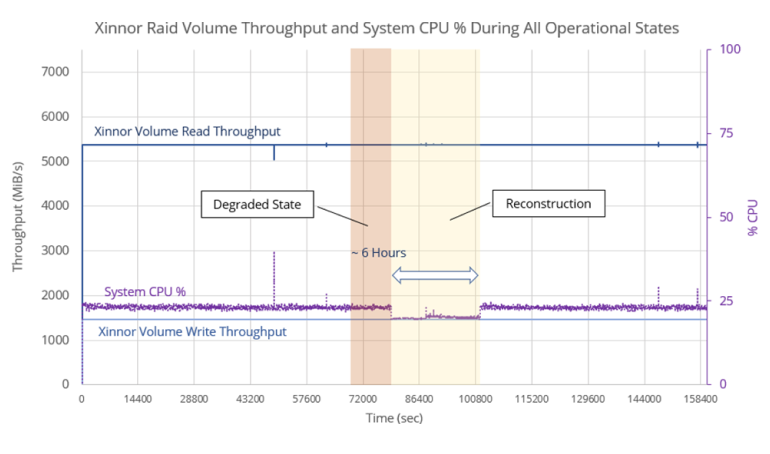

Assessing a RAID solution's performance in degraded and reconstructing states is crucial. In the degraded state, fewer drives are available for IO requests, and intra-RAID IO adds traffic as data on good disks is used for reconstruction on new disks. xiRAID allows controlled array reconstruction for predictability and efficiency. ScaleFlux CSD 3310 NVMe SSDs offer inline compression to minimize the impact of intra-RAID reconstruction IO. The combination of these technologies creates a top-tier software RAID solution for high-performance workloads in all RAID operating states.
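To make the intra-RAID traffic concrete, here is a generic single-parity (RAID 5 style) illustration of why every surviving drive must be read to rebuild a lost block. It is a textbook XOR sketch, not xiRAID's implementation, and the 4 data blocks plus 1 parity block layout is an assumption chosen for illustration rather than the configuration used in the test.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe: four data blocks (illustrative contents) and their parity block.
data = [b"AAAA", b"BBBB", b"CCCC", b"DDDD"]
parity = xor_blocks(data)

# Simulate losing the drive that held data[2]. Rebuilding it requires reading
# the corresponding block from every surviving drive, parity included.
lost = 2
survivors = [blk for i, blk in enumerate(data) if i != lost] + [parity]
rebuilt = xor_blocks(survivors)

print(rebuilt == data[lost])
```

Because each rebuilt block requires a read from all remaining drives, reconstruction competes with application IO, which is why controlling the rebuild rate matters for predictable performance.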

Test Results

During the reconstruction process, there is a minor decrease in CPU utilization, but the overall read and write throughput remains steady at 5.4GB/s and 1.7GB/s, respectively.

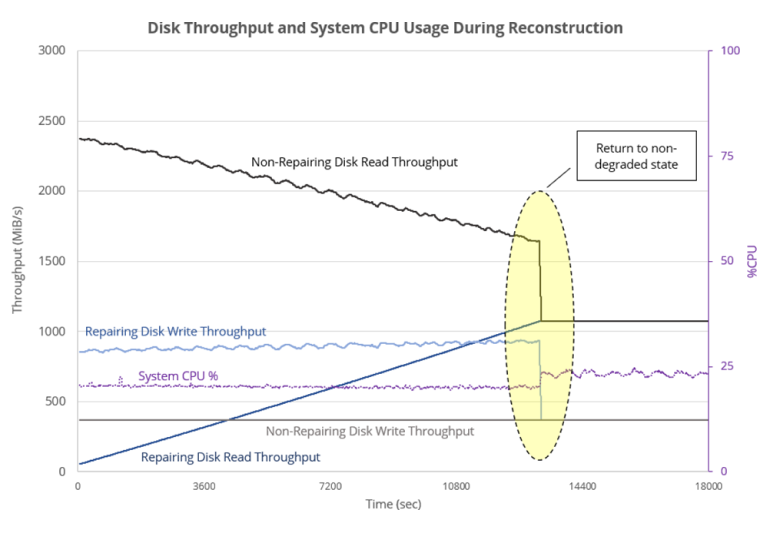

For a more comprehensive analysis of the reconstruction phase, we measured the read and write throughput of both a regular drive and the drive undergoing reconstruction.

The regular drive maintains a consistent write throughput while supporting a higher read throughput by contributing data to the reconstructing drive. As the reconstruction progresses, the amount of data read from the regular drive decreases, and by the end of the phase, both drives contribute equally to the overall workload.

The application note covers these results in more detail.

Test setup

CPU: dual-socket Xeon Gold 6342

Cores: 48 physical cores

DRAM: 512GB

SSD: five 7.68TB ScaleFlux CSD 3310 PCIe Gen 4 SSDs

OS: Ubuntu 22

Kernel version: 5.15.0-70

Certification tool: Aerospike