

About KIT

Karlsruhe Institute of Technology (KIT) stands as a pioneering institution in Germany, formed through the historic merger in 2009 of the University of Karlsruhe and the Karlsruhe Research Center. This union, the first of its kind in Germany, seamlessly integrates academic and non-academic entities, breaking down traditional barriers in the scientific and research landscape.

Functioning as a national research center under the Helmholtz Association, KIT has positioned itself at the forefront of cutting-edge research. Specializing in diverse fields, the institute is committed to pushing the boundaries of knowledge and innovation. As a testament to its academic excellence, KIT is a proud member of TU9, a prestigious society comprising Germany's largest and most distinguished institutes of technology.

Challenge

HPSS (High Performance Storage System) is operated at Karlsruhe Institute of Technology (KIT) as a platform for archival storage on magnetic tape, mainly for the Grid Computing Center Karlsruhe (GridKa) (www.gridka.de) and the bwDataArchive (rda.kit.edu) service.

The overall system is currently based on 3 tape libraries storing more than 100 petabytes distributed over 2 sites.

Managing such a large pool of data on tape requires a properly implemented cache. For writing, the cache aggregates writes into files of 300GB to reduce the number of tape marks, while streaming to tape at 380MB/s. For reading, the cache must handle Full Aggregate Return (FAR) effectively, since a request for one file triggers the recall of a full aggregate, while keeping up with a tape drive read speed of 400MB/s. When implementing a cache, writing to tape implies 2 reads and 1 write, while reading from tape implies 1 read and 1 write to the cache.
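To make the cache load concrete, here is a rough back-of-the-envelope sketch in Python. It reads the I/O counts above as per-byte costs on the cache (1 write and 2 reads for each byte migrated to tape, 1 write and 1 read for each byte recalled), which is an interpretation rather than something the text spells out. The drive counts are hypothetical parameters, and GB and MB are taken as decimal units.

```python
# Figures taken from the text; the drive counts used below are hypothetical.
TAPE_WRITE_MB_S = 380   # tape drive write speed
TAPE_READ_MB_S = 400    # tape drive read speed
AGGREGATE_GB = 300      # size of one aggregate written to tape


def cache_load_mb_s(drives_writing: int, drives_reading: int) -> dict:
    """Estimate the cache bandwidth needed to keep the tape drives streaming.

    Assumption: each byte migrated to tape costs the cache 1 write and
    2 reads; each byte recalled from tape costs 1 write and 1 read.
    """
    migrate = drives_writing * TAPE_WRITE_MB_S
    recall = drives_reading * TAPE_READ_MB_S
    return {
        "cache_read_mb_s": 2 * migrate + recall,
        "cache_write_mb_s": migrate + recall,
    }


def aggregate_write_seconds() -> float:
    """Time one drive needs to stream a full 300GB aggregate to tape."""
    return AGGREGATE_GB * 1000 / TAPE_WRITE_MB_S


if __name__ == "__main__":
    # Hypothetical mix: 10 drives writing and 4 drives reading at once.
    print(cache_load_mb_s(drives_writing=10, drives_reading=4))
    print(f"{aggregate_write_seconds() / 60:.1f} min per aggregate")
```

Under these assumptions a single aggregate keeps one drive busy for roughly 13 minutes, and the cache must sustain its share of the bandwidth for that whole time without stalling the stream.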

Options

At first, KIT tried a cache implementation based on 2 NetApp E5700 systems with 120x 8TB HDDs each, for 1.92PB raw and 1.8PB usable capacity.

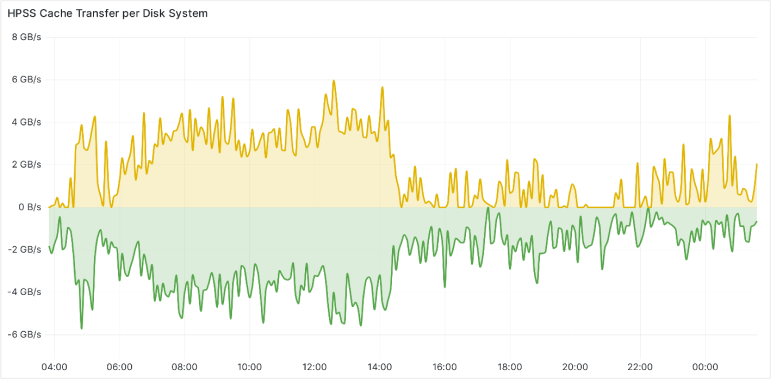

Based on the specification of this storage solution, KIT expected to read from the cache at 12GB/s per system with a 70% read workload. Unfortunately, the achieved performance fell well short of expectations: the measured peak performance in read and write was just 6GB/s per system, with the average speed at around 2GB/s. It turned out that the original definition of the workload did not take into account that the tape drives expect constant streams above 300MB/s, which could not be achieved due to the highly random write and read workload of clients writing to the hard drives. In addition, the storage systems prioritized writes over reads, starving the streams toward the tape drives.

To cope with the mostly random workload, the next natural step was to test a system based on SSDs. In a second attempt, KIT tested 2 Dell PowerVault ME5024 systems with 2 extension enclosures and 48x 3.84TB SSDs each, resulting in around 250TB usable capacity.

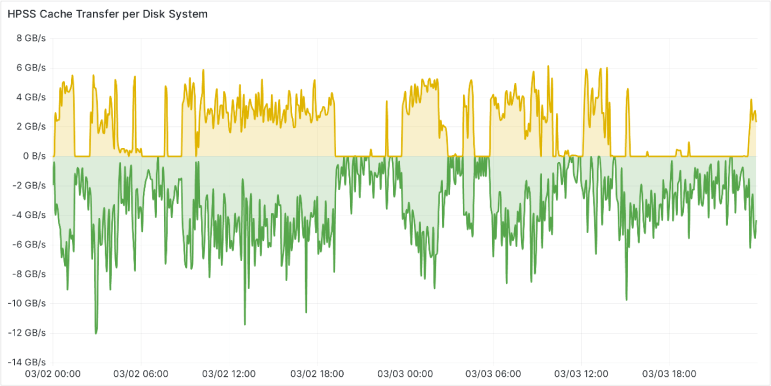

The solution based on SSDs provided lower latency and consequently an improvement in the write speed to the tapes. However, the throughput was still limited by the capabilities of the controllers used inside this system. The measured peak performance was around 5GB/s in write and 12GB/s in read, with an average speed to tape of about 3GB/s in write and 5GB/s in read.

Solutions

From the first 2 trials, KIT understood that the optimal cache required low latency, high throughput and storage redundancy. These requirements call for typical All Flash Arrays. Unfortunately, AFAs from OEMs are quite expensive, so KIT did an additional experiment by evaluating a solution based on NVMe SSDs and xiRAID, the software RAID developed by Xinnor to handle the performance of NVMe drives.

This simple setup is centered around a standard server based on an AMD EPYC 9554P processor with 64 cores, 512GB of DRAM, 10x 30TB Micron 9400 NVMe SSDs, 4x 100Gbit/s Ethernet links and, last but not least, Xinnor’s xiRAID in RAID6 with several logical volumes (LV). With double-parity RAID, the usable capacity is 240TB. This RAID implementation can survive the failure of 2 drives out of 10, giving peace of mind in terms of reliability and data integrity.
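The 240TB figure follows from standard RAID6 arithmetic: two drives' worth of capacity goes to parity. A minimal Python sketch of that calculation is shown below; the function name is illustrative and not part of xiRAID or any other tool.

```python
def raid6_usable_tb(drive_count: int, drive_tb: float) -> float:
    """Usable capacity of a single RAID6 group.

    RAID6 stores two parity blocks per stripe, so the capacity of two
    drives is spent on redundancy regardless of the group size.
    """
    if drive_count < 4:
        raise ValueError("RAID6 needs at least 4 drives")
    return (drive_count - 2) * drive_tb


if __name__ == "__main__":
    raw = 10 * 30                      # 10 drives of 30TB each
    usable = raid6_usable_tb(10, 30)   # 8 data drives' worth
    print(f"raw {raw}TB, usable {usable}TB, parity overhead {1 - usable / raw:.0%}")
```

For the configuration described above this gives 300TB raw and 240TB usable, which is a 20% parity overhead.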

Test Results

Test setup:

- 2U Supermicro AS 2015CS TNR

- Single AMD EPYC 9554P 64-Core 3.1GHz

- 512GB RAM

- 10x 30TB Micron 9400 NVMe devices (7GB/s)

- 4x 100Gbit/s Ethernet

- xiRAID Level 6 with several regular Logical Volumes on top

fio benchmarks with different block sizes/file sizes/number of clients with constant 2:1 read:write ratio:

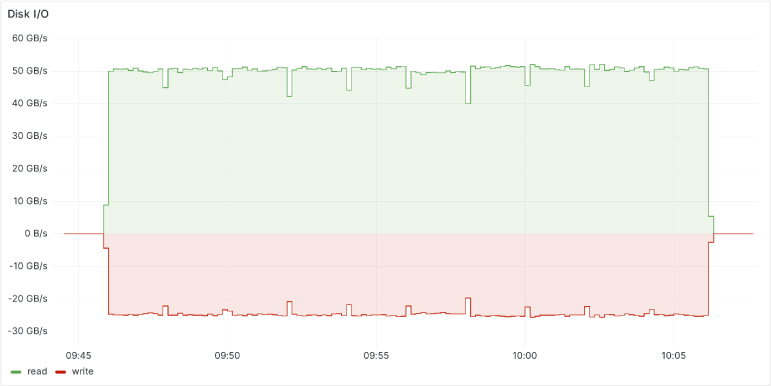

The results were consistently above 50GB/s in read and 25GB/s in write, drastically outperforming the other 2 solutions.
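The exact fio job parameters are not given here, so the following Python sketch only illustrates how a sweep over block sizes, file sizes and job counts with a fixed 2:1 read:write mix could be generated. The mount point, job name, value lists and the use of `numjobs` to stand in for "clients" are all assumptions. The fio options themselves (`rw`, `rwmixread`, `bs`, `size`, `numjobs`, `ioengine`, `direct`, `group_reporting`) are standard, and since `rwmixread` takes a whole percentage, 2:1 is approximated as 67.

```python
from itertools import product


def fio_command(directory, block_size, file_size, jobs, pattern="rw"):
    """Build one fio command line with a roughly 2:1 read:write mix."""
    return [
        "fio",
        "--name=cache-mix",           # hypothetical job name
        f"--directory={directory}",   # hypothetical mount point of a volume
        f"--rw={pattern}",            # "rw" = sequential mix, "randrw" = random mix
        "--rwmixread=67",             # about 2 reads for every write
        f"--bs={block_size}",
        f"--size={file_size}",
        f"--numjobs={jobs}",          # stands in for the number of clients
        "--ioengine=libaio",
        "--direct=1",                 # bypass the page cache
        "--group_reporting",
    ]


def sweep(directory, block_sizes, file_sizes, job_counts):
    """Return one command per combination of the swept parameters."""
    return [
        fio_command(directory, bs, size, jobs)
        for bs, size, jobs in product(block_sizes, file_sizes, job_counts)
    ]


if __name__ == "__main__":
    # Illustrative values only; not the parameters used in the KIT tests.
    for cmd in sweep("/mnt/cache", ["128k", "1m"], ["10g"], [8, 32, 64]):
        print(" ".join(cmd))
```

The script only prints the command lines, so they can be reviewed before anything is run against a real volume.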

Download the presentation to learn more about the KIT testing: https://doi.org/10.5445/

Conclusion

In conclusion, the tests conducted by KIT to find the optimal cache for their tape libraries showcase the effectiveness of a solution based on a standard x86 server, equipped with local NVMe storage and protected by Xinnor’s xiRAID. This solution exhibits remarkable and stable performance at low power and at a price point significantly lower than the alternatives tested by KIT. xiRAID provides data integrity while delivering the performance needed to operate the cache tier effectively.