About

DapuStor Corporation, founded in April 2016, is a leading expert in high-end enterprise solid-state drives (SSDs), SoCs, and edge computing-related products. With world-class R&D strength and over 380 team members, it has comprehensive capabilities from chip design and product development to mass production. Its products are widely used in servers, by telecom operators, and in data centers.

Solution

NVMe SSDs are built on technology that delivers very high speed and low latency, which makes them an ideal solution for core data storage in fields such as enterprise IT and AI. The industry also demands high reliability from such cutting-edge products, and RAID plays an important role in meeting that demand. In this test, the DapuStor R5102 eSSD is combined with xiRAID to analyze the performance of the RAID group.

Configuration

The test server had the following specification:

Server: Tencent Starlake

CPU: AMD EPYC 7352 24-Core

RAM: 16/3200 MHz x16 units

Storage Drives: 4.18.0-305.el8.x86_64

OS: CentOS 8.5.2111, kernel 5.4.244-1.el8.elrepo.x86_64

Four disks in the test server are used for testing. All of them belong to the same NUMA node, with two disks attached to each root complex. The disks are formatted with a 4K block size before the tests.

Test Method

The test procedure is as follows. First, a RAID group, either RAID 5 or RAID 6, is created and initialized. It is then preconditioned by writing the entire RAID block device twice. After that, the random read test and the random write test are carried out in sequence. The random write test runs for more than 2 hours so that all SSDs within the RAID group reach steady state.
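The procedure above could be scripted roughly as follows. This is a minimal sketch that assumes fio as the load generator, which the text does not name. The device path, block sizes, queue depths, job counts, and run times are all hypothetical placeholders rather than the settings used in this test. The code only builds the command lines and does not run them.

```python
"""Sketch: build fio command lines for precondition, random read, random write.

Assumption: fio is the benchmark tool (the write-up does not specify one).
All tuning values below are illustrative placeholders, not the tested settings.
"""


def fio_cmd(name, device, rw, bs, iodepth, numjobs, extra=()):
    """Return one fio invocation as an argument list."""
    return [
        "fio",
        f"--name={name}",
        f"--filename={device}",
        f"--rw={rw}",
        f"--bs={bs}",
        f"--iodepth={iodepth}",
        f"--numjobs={numjobs}",
        "--ioengine=libaio",
        "--direct=1",          # bypass the page cache
        "--group_reporting",
        *extra,
    ]


def build_test_plan(device, randread_seconds=600, randwrite_seconds=7500):
    """Return the three steps in order: precondition, random read, random write."""
    if randwrite_seconds <= 2 * 3600:
        # The text requires more than 2 hours of random writes for steady state.
        raise ValueError("random write must run for more than 2 hours")

    # Step 1: write the whole RAID block device twice, sequentially.
    precondition = fio_cmd(
        "precondition", device, "write", "128k", 32, 1, extra=("--loops=2",)
    )
    # Step 2: time-based 4K random read (duration is a placeholder).
    randread = fio_cmd(
        "randread", device, "randread", "4k", 64, 8,
        extra=("--time_based", f"--runtime={randread_seconds}"),
    )
    # Step 3: time-based 4K random write, longer than 2 hours.
    randwrite = fio_cmd(
        "randwrite", device, "randwrite", "4k", 64, 8,
        extra=("--time_based", f"--runtime={randwrite_seconds}"),
    )
    return [precondition, randread, randwrite]


if __name__ == "__main__":
    # "/dev/example_raid" is a hypothetical device path.
    for cmd in build_test_plan("/dev/example_raid"):
        print(" ".join(cmd))
```

Each entry can be passed to `subprocess.run` once the RAID device exists. Creating and initializing the RAID group is left out because it depends on the RAID engine's own tooling.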

The random write performance of a RAID group is evaluated against a baseline. We take 75% of the IOPS obtained from the raw-disk test as the baseline for RAID 5, and 50% for RAID 6. With RAID 5, 3 of the 4 disks hold effective data on each write, so the theoretical maximum IOPS is 75% (3/4) of that of the raw disks. Similarly, with RAID 6 only 2 of the 4 disks hold effective data, so the maximum is 50% (2/4). For the random read test, however, we always take 100% of the raw-disk IOPS as the baseline, since reads incur no overhead for parity calculation and placement.
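The baseline rule can be written out as a small calculation. The sketch below generalizes the 3/4 and 2/4 fractions to (disks − parity disks) / disks. The function names and the raw IOPS figure in the example are hypothetical and are not measurements from this test.

```python
from fractions import Fraction

# Number of disks' worth of parity per stripe for each RAID level.
PARITY_DISKS = {"raid5": 1, "raid6": 2}


def write_baseline_fraction(level, n_disks):
    """Fraction of raw-disk IOPS used as the random write baseline."""
    parity = PARITY_DISKS[level]
    if n_disks <= parity:
        raise ValueError("not enough disks for this RAID level")
    return Fraction(n_disks - parity, n_disks)


def baseline_iops(raw_iops, level, n_disks, workload):
    """Baseline IOPS: 100% of raw for reads, data-disk fraction for writes."""
    if workload == "randread":
        return float(raw_iops)
    if workload == "randwrite":
        return raw_iops * float(write_baseline_fraction(level, n_disks))
    raise ValueError(f"unknown workload: {workload}")


def percent_of_baseline(measured_iops, raw_iops, level, n_disks, workload):
    """Measured IOPS expressed as a percentage of the baseline."""
    return 100.0 * measured_iops / baseline_iops(raw_iops, level, n_disks, workload)


if __name__ == "__main__":
    raw = 1_000_000  # hypothetical raw-disk IOPS, for illustration only
    for level in ("raid5", "raid6"):
        print(level, baseline_iops(raw, level, 4, "randwrite"))
```

For the 4-disk groups tested here, the fractions come out to 3/4 for RAID 5 and 1/2 for RAID 6, which matches the 75% and 50% baselines stated above.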

Conclusion

Our test results show that the IOPS of RAID 5 and RAID 6 is at least 45% of the raw-disk IOPS in the random write tests, and at least 97% in the random read tests. Given the overhead of parity calculation and placement, this result is promising.

Linux mdraid was also tested as an alternative way to build the RAID group. In these tests, xiRAID performed much better than mdraid. We also observed that CPU utilization under xiRAID is evenly distributed during the random write test, which mdraid does not achieve.

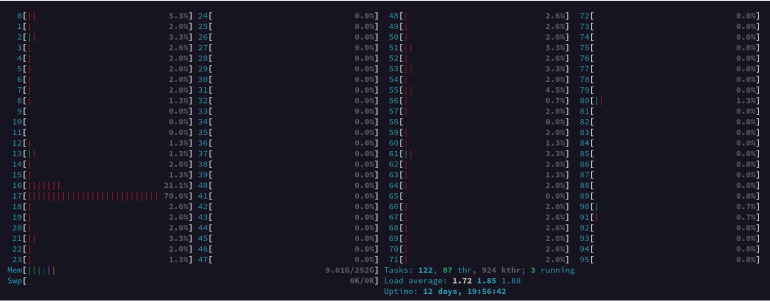

CPU utilization during random write test on xiRAID:

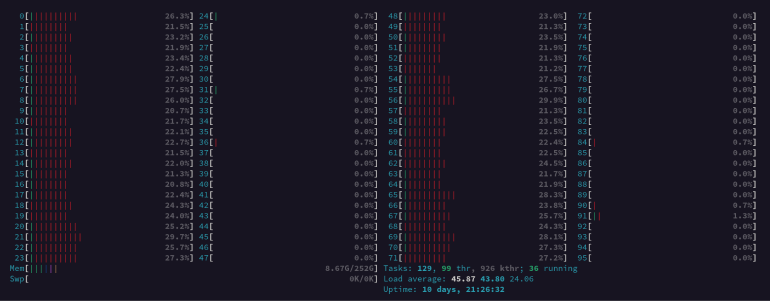

CPU utilization during random write test on mdraid: