On Nov. 10, AMD officially released its 4th generation EPYC server processors, codenamed Genoa. In this post, we'll talk about how this release affects the storage architecture and our plans.

Let's start with the main features:

More cores will be useful in building high-performance All-Flash arrays. We still recommend 2 cores per NVMe drive and still prefer single-socket configurations. Per-core performance is increasing, but drive performance will also increase significantly with the move to PCIe Gen5.

Samsung PM1743, for example, promises us 13GBps and 2.5M IOps for reads. Write performance approaches 7GBps. A basic RAID5 volume of 4 drives can theoretically sustain 50GBps/10MIOps reads and 20GBps writes.
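The arithmetic behind these figures can be sketched as follows. This is an idealised estimate, not a benchmark: it assumes reads scale linearly across all drives and that writes are full-stripe, so one drive's worth of bandwidth goes to parity. The function name `raid5_estimate` is purely illustrative.

```python
def raid5_estimate(drives, read_gbps, read_miops, write_gbps):
    """Idealised RAID5 ceilings from per-drive figures.

    Assumptions (not measured): reads are spread over all drives,
    full-stripe writes carry user data on drives - 1 of them.
    """
    if drives < 3:
        raise ValueError("RAID5 needs at least 3 drives")
    data_drives = drives - 1  # one drive's worth of capacity holds parity
    return {
        "read_gbps": drives * read_gbps,
        "read_miops": drives * read_miops,
        "write_gbps": data_drives * write_gbps,
    }


# Per-drive figures quoted above for the PM1743: 13GBps, 2.5M IOps, ~7GBps writes
est = raid5_estimate(drives=4, read_gbps=13, read_miops=2.5, write_gbps=7)
print(est)
```

With the per-drive numbers from the text, the sketch gives 52GBps and 10M IOps for reads and 21GBps for writes, which is where the rounded 50/10/20 figures come from.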

And if we remember that there are monstrous solutions for 48+ drives, it becomes clear that the power of many cores will be needed.
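To put a number on that, here is a rough sizing sketch based on the 2-cores-per-drive rule of thumb mentioned earlier. The default of 96 cores per socket is our assumption for a top-bin Genoa part and is not stated elsewhere in this post; the helper names are illustrative.

```python
CORES_PER_NVME = 2  # rule of thumb from this post


def cores_needed(drives, cores_per_drive=CORES_PER_NVME):
    """Cores required to drive the given number of NVMe devices."""
    return drives * cores_per_drive


def fits_single_socket(drives, socket_cores=96):
    """socket_cores=96 is an assumed core count for the largest Genoa SKU."""
    return cores_needed(drives) <= socket_cores


print(cores_needed(48))        # cores for a 48-drive system
print(fits_single_socket(48))  # does it still fit in one socket?
print(fits_single_socket(60))
```

Under these assumptions, a 48-drive system needs 96 cores, which is exactly one fully populated socket, and anything larger no longer fits the single-socket design we prefer.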

In addition to the backend performance increase, we got a proportional increase in the performance of the network controllers. NVIDIA ConnectX-7 SmartNICs deliver over 40GBps per adapter.

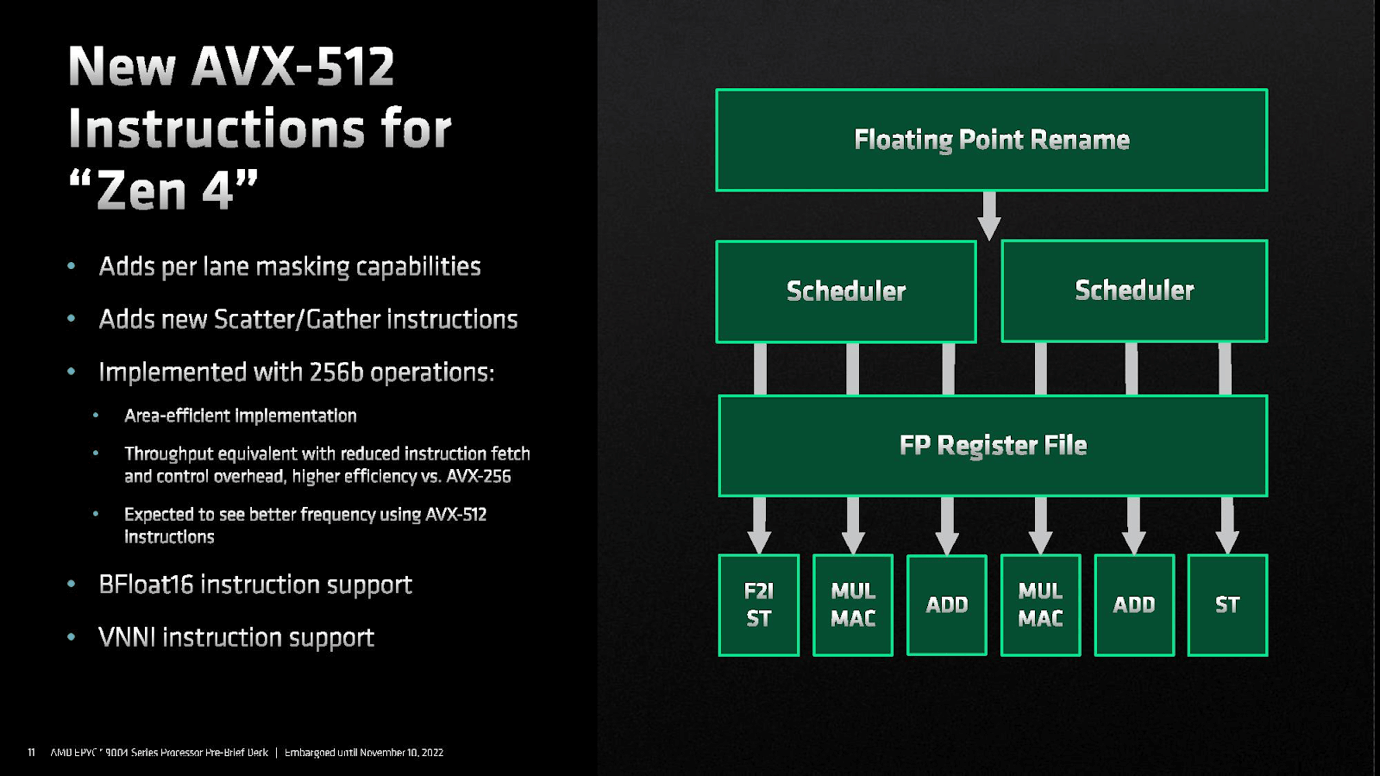

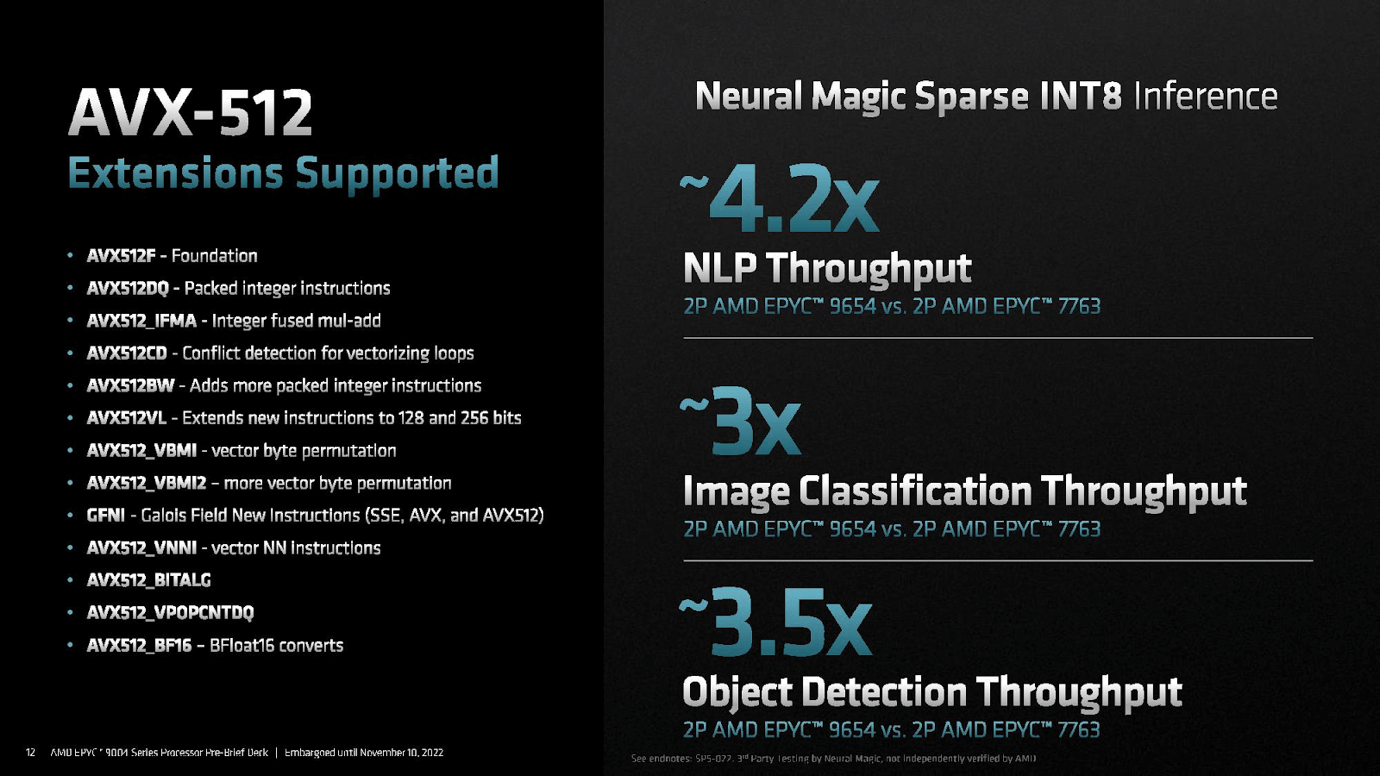

As a result, we are getting very powerful hardware, and not every piece of software will be able to fully utilize it. Luckily, the Zen 4 microarchitecture supports AVX-512, which will likely increase the performance of some storage services. However, AMD has its own approach and implements AVX-512 on a 256-bit datapath.

On top of core performance increase, now in a two-socket configuration we can have up to 12TB of memory. That’s enough to house a sizeable database entirely in DRAM and periodically dump changes to non-volatile Flash.

Overall, we see a significant increase in performance on tasks that use HPC infrastructure. This, in turn, increases the performance requirements of the storage systems that supply the cluster with data and are used for checkpoint operations.

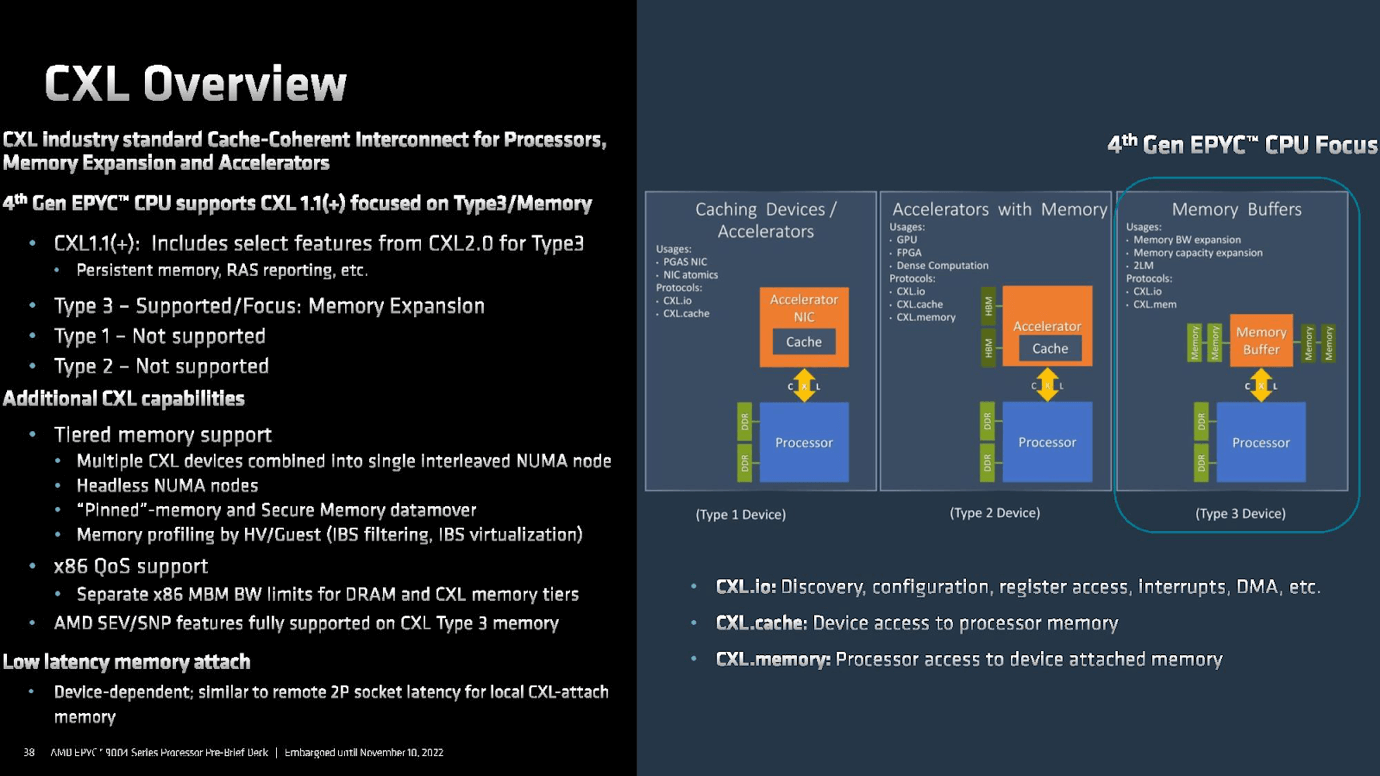

One of the most important additions is support for CXL 1.1 with some features from CXL 2.0 for Type 3 devices. It allows the CPU to address remote memory (Type 3 CXL devices, i.e. Memory Expansion) as if it were local. Additionally, CXL can combine multiple expansion devices into a single interleaved NUMA node, and it also supports headless NUMA nodes. Today these Type 3 memory expansion devices are connected directly to the PCIe bus, but they will be networked before long.
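As an illustration of what a headless NUMA node looks like from the operating system, here is a minimal sketch that lists NUMA nodes with memory but no CPUs by reading Linux sysfs. It assumes a Linux host that exposes `/sys/devices/system/node/nodeN/cpulist`, and it assumes the platform presents CXL memory as a CPU-less NUMA node, which depends on firmware and kernel configuration. The function name `cpuless_numa_nodes` is our own.

```python
from pathlib import Path


def cpuless_numa_nodes(sysfs_root="/sys/devices/system/node"):
    """Return names of NUMA nodes whose cpulist is empty (no local CPUs)."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []  # not Linux, or sysfs is not mounted
    found = []
    for node_dir in root.glob("node[0-9]*"):
        cpulist = node_dir / "cpulist"
        # An empty cpulist means the node has no CPUs attached to it
        if cpulist.is_file() and cpulist.read_text().strip() == "":
            found.append(node_dir.name)
    # Sort numerically so node2 comes before node10
    return sorted(found, key=lambda name: int(name[len("node"):]))


if __name__ == "__main__":
    print(cpuless_numa_nodes())
```

On a system without such nodes the list is simply empty. A CPU-less node is not necessarily CXL memory, so treat the result as a hint rather than a device inventory.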

One example of a Type 3 CXL device is Samsung’s Memory-Semantic SSD. It combines a DRAM cache with non-volatile NAND storage, much like many SSDs on the market today, but it is accessed not via NVMe or SCSI but via DRAM protocols, at DRAM speeds and latencies. The large cache buffers IO, boosting random read performance by up to 20x, while the drive’s controller flushes data to NAND in the background.

Such solutions further blur the line between Storage and Memory, and their next generations will make it possible to disaggregate memory resources and interact with them as flexibly as with a SAN. But this also creates new challenges for OS and system software developers, because data availability issues and the demand for familiar storage services remain.

Xinnor plans to continue developing xiRAID and to remain the performance leader on new generations of PCIe Gen5-compatible hardware. In addition, we are building products that allow us to work efficiently with disaggregated storage resources, with a heavy focus on HPC and AI tasks.

In the medium term, we plan to work on achieving high efficiency in storage, transport and processing using xPUs. The development of the CXL specification and the emergence of real devices based on it, combined with our key development competencies, will allow us to move forward quickly.